I recently worked on a project to optimize a Docker image for a Node app and address some problems we'd been experiencing. We use Buildkite, and the Buildkite agents were running out of memory during the step that builds the image. The image also seemed too big at 2.7GB.

Briefly, here are some of the things I learned during my investigation:

- Use layering to avoid unnecessarily re-running steps. For example, if you copy over the `package.json` file and run install before copying over everything else and running `yarn build`, Docker will only re-install dependencies if the `package.json` has changed. If, on the other hand, you first copy over everything, Docker will run install every time any file changes, regardless of whether it's actually needed.

- Use multistage builds: the final Docker image will only be as large as it needs to be to contain the precise files that you need.

- Consider using `turbo prune`. Assuming you work in a monorepo, this will generate a folder with just the files you need.

- Consider using the `output: 'standalone'` option in `next.config.js`, which creates a folder with just the files you need (see docs).
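A rough, untested sketch of how the last two options might combine, reusing the placeholder names from the Dockerfile later in this post (`nodeBaseImage`, `@namespace/app-name`, `apps/my-app`). The stage names `pruner` and `builder`, the workspace `build` script, and the existence of a `public` folder are assumptions. It also assumes `next.config.js` sets `output: 'standalone'` with the file tracing root pointed at the repo root, so that `server.js` ends up under `apps/my-app/` inside the standalone folder.

```dockerfile
# Untested sketch. Stage names, the build script, and the output paths are assumptions.
FROM nodeBaseImage AS pruner
WORKDIR /home/node/app
COPY . .
# Writes a pruned copy of the monorepo for one workspace to ./out
# (turbo 1.x releases used --scope=<name> instead of a positional argument)
RUN npx --yes turbo prune @namespace/app-name

FROM nodeBaseImage AS builder
WORKDIR /home/node/app
COPY --from=pruner /home/node/app/out/ .
# Plain install, since the pruned lockfile may differ from the original one
RUN yarn install
# Assumes the workspace defines a "build" script that runs next build
RUN yarn workspace @namespace/app-name build

FROM nodeBaseImage
WORKDIR /home/node/app
# The standalone folder holds server.js plus only the node_modules files it traced
COPY --from=builder /home/node/app/apps/my-app/.next/standalone ./
# Static assets and public files are not part of the standalone output, so copy them separately
COPY --from=builder /home/node/app/apps/my-app/.next/static apps/my-app/.next/static
COPY --from=builder /home/node/app/apps/my-app/public apps/my-app/public
USER node
EXPOSE 3000
CMD ["node", "apps/my-app/server.js"]
```

`turbo prune` also accepts a `--docker` flag, which splits the output so that the install step can sit in its own cached layer. Depending on the Yarn version, the builder stage may need extra Yarn configuration files copied in as well.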

I didn't use Turbo or the output: 'standalone' option, although I'd like to explore these in a

follow-up phase. Instead, I built the app in a seperate buildkite pipeline step prior to the Docker

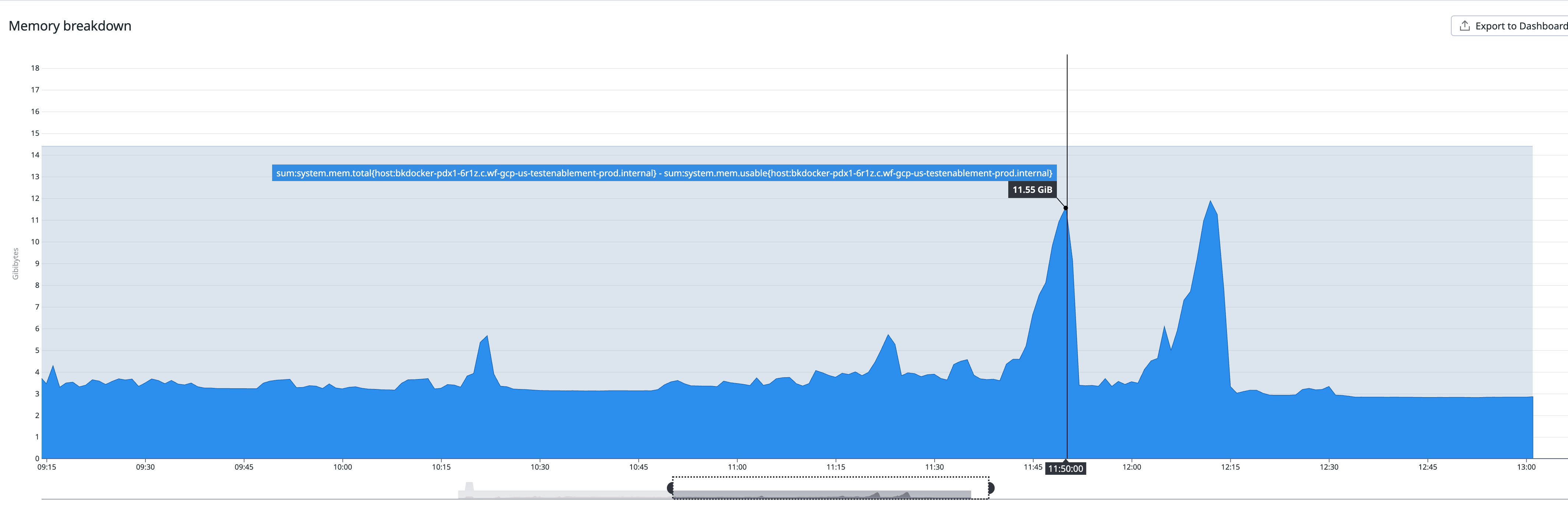

build step. While I'm not positive this is the best solution, it does seem to be correlated with

reducing memory usage of the Docker build step (see images below).

After building the app, we use a multistage build to first import the app, then remove all the parts we don't need for production (src files, etc.), then copy just what we need into the final stage.

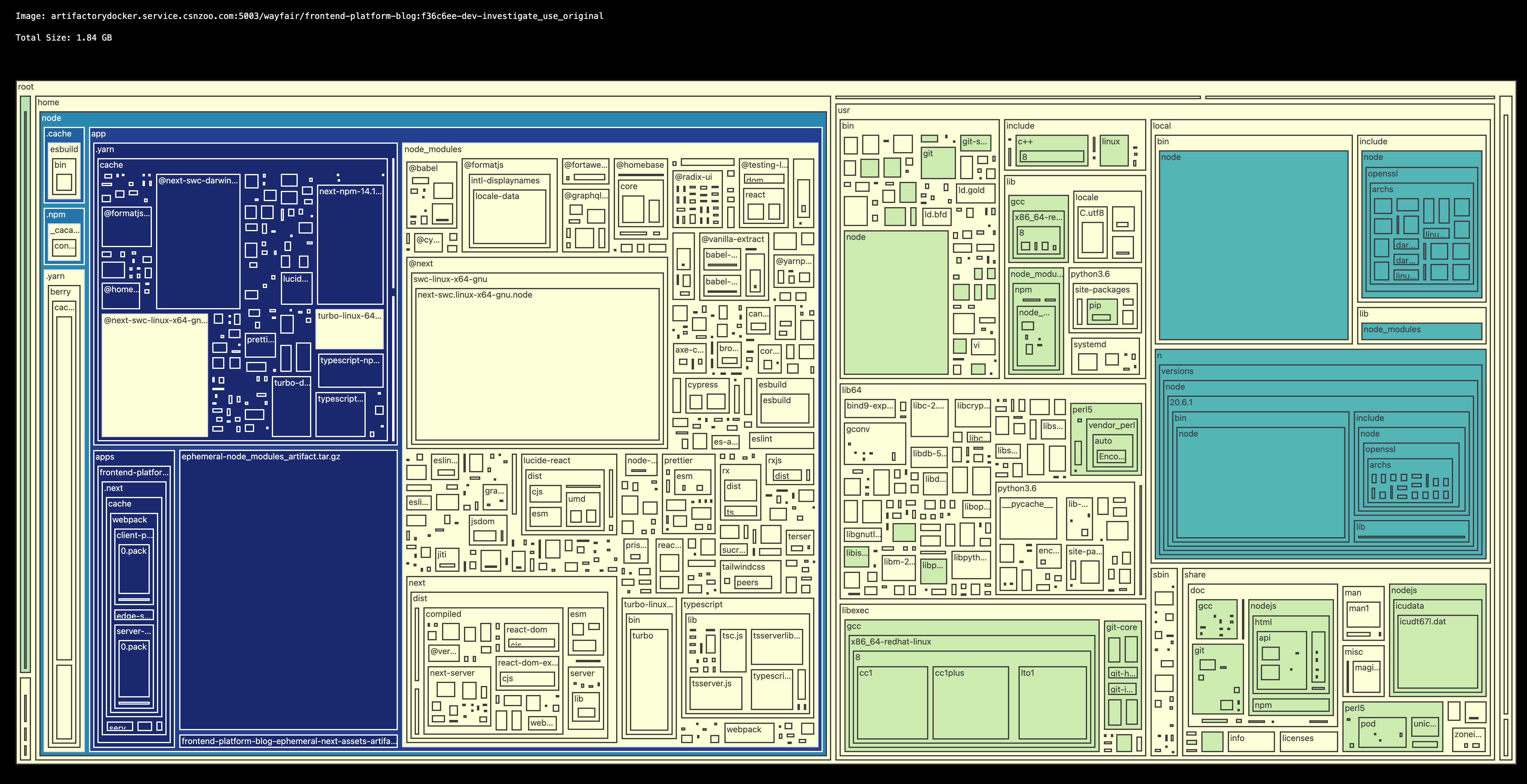

At the end of this process, our Docker image was down from nearly 2.7GB to about 1.8GB. Here's a Dockerfile that shows our basic approach:

```dockerfile
# First of two stages of the multistage Dockerfile
FROM nodeBaseImage AS installer
WORKDIR /home/node/app

# Copy package.json (and the lockfile that --immutable checks against)
# and install in a layer before other layers
COPY ./package.json ./yarn.lock ./
RUN yarn --immutable

COPY . .

# The tools and libs folders contain many sub-folders that we don't need; this command removes them
RUN find tools/ libs/ -type d \( -name src -o -name translations -o -name cypress -o -name .storybook -o -name .turbo \) ! -path "*/dist/*" -exec rm -rf {} +

# Delete all the files in tools and libs except those in the dist folders
RUN find tools/ libs/ -type f ! -path "*/dist/*" -exec rm -f {} +

# Make a second stage
FROM nodeBaseImage
WORKDIR /home/node/app

# Source paths are absolute paths inside the installer stage, under its WORKDIR.
# --chown makes the copied files owned by the node user set below.
COPY --from=installer --chown=node:node /home/node/app/node_modules node_modules
COPY --from=installer --chown=node:node /home/node/app/apps/my-app apps/my-app

# Set the user to be node for security reasons. USER does not carry over
# between stages, so it is set here in the final stage.
# This is a best practice per https://github.com/nodejs/docker-node/blob/main/docs/BestPractices.md#non-root-user
USER node

EXPOSE 3000
CMD [ "yarn", "workspace", "@namespace/app-name", "next"]
```

Some useful resources:

- docker-phobia, which is basically like WebpackBundleAnalyzer for Docker.

- docker-dive, a tool for exploring the image in terms of its layers.

Screenshots

Docker phobia


Memory usage on Buildkite agent